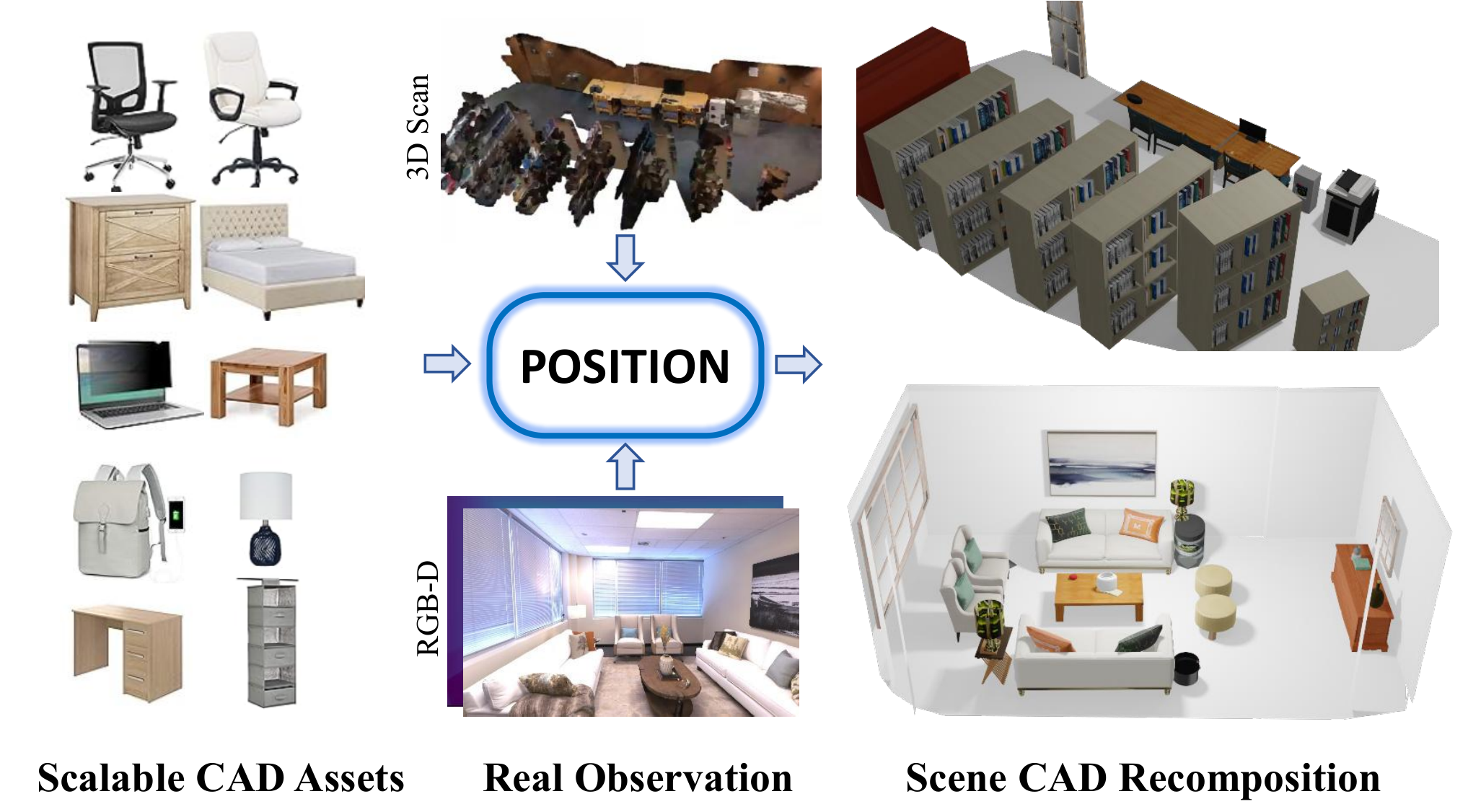

Our open-world scene CAD recomposition method, POSITION, takes captured real 3D scene data as input, understands the scene's semantic spatial layout, and retrieves CAD models from scalable assets to recompose a high-fidelity 3D scene.

3D scene CAD recomposition aims to reconstruct a given scene by retrieving and assembling CAD models from a database, so as to accurately simulate the geometric properties and spatial arrangement of the original environment. Recent methods learn this task by training on limited scan-to-CAD annotation data, which hinders their generalization to diverse real-world scenes. In this paper, we propose POSITION, an open-world 3D scene CAD recomposition method that reconstructs a 3D scene with CAD models retrieved from an open-set database. POSITION follows a divide-and-conquer strategy. First, we extract open-world multi-modal object representations from a captured 3D scene. Second, on top of these representations, we propose a coarse-to-fine retrieval method to retrieve CAD models that visually, geometrically, and semantically match the real objects. Third, we present a physically plausible pose alignment method that adjusts the retrieved CAD models so that their geometry and layout remain consistent with the observation. By decomposing the problem into well-defined subtasks, our approach generalizes across various scene types and scalable CAD databases without retraining or fine-tuning. Our approach demonstrates superior CAD recomposition performance on both Scan2CAD and diverse real-world 3D scene datasets.

Method Overview. The captured 3D scene data is first analyzed by an ensemble of state-of-the-art open-world scene understanding models. Subsequently, the identified multi-modal instances undergo individual retrieval processing followed by a joint retrieval refinement stage. Finally, the retrieved CAD models are aligned to the targets in the scene with physically plausible pose optimization.
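To make the coarse-to-fine idea concrete, the following is a minimal illustrative sketch and not POSITION's actual implementation. It assumes each object and each CAD asset already has a feature embedding and a bounding-box size. The function names (`cosine`, `shape_error`, `coarse_to_fine_retrieve`), the toy `ASSETS` list, and the aspect-ratio criterion used for the fine stage are all hypothetical choices made for illustration. The joint retrieval refinement and the pose optimization stages are not covered here.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def shape_error(extents_a, extents_b):
    """L1 distance between sorted, max-normalized box extents.

    Hypothetical geometric criterion: it compares aspect ratios only,
    so it is invariant to uniform scale and to axis ordering.
    """
    a = sorted(e / max(extents_a) for e in extents_a)
    b = sorted(e / max(extents_b) for e in extents_b)
    return sum(abs(x - y) for x, y in zip(a, b))

def coarse_to_fine_retrieve(query_embedding, query_extents, assets, top_k=3):
    """Return the asset chosen by a two-stage (coarse, then fine) search."""
    # Coarse stage: shortlist the top_k assets by embedding similarity.
    shortlist = sorted(
        assets,
        key=lambda a: cosine(query_embedding, a["embedding"]),
        reverse=True,
    )[:top_k]
    # Fine stage: re-rank the shortlist by geometric agreement.
    return min(shortlist, key=lambda a: shape_error(query_extents, a["extents"]))

# Toy asset database (made-up values, for illustration only).
ASSETS = [
    {"name": "office_chair", "embedding": [0.9, 0.1, 0.0], "extents": [0.6, 0.6, 1.1]},
    {"name": "stool",        "embedding": [0.8, 0.2, 0.0], "extents": [0.4, 0.4, 0.5]},
    {"name": "table",        "embedding": [0.1, 0.9, 0.0], "extents": [1.6, 0.8, 0.75]},
    {"name": "lamp",         "embedding": [0.0, 0.1, 0.9], "extents": [0.3, 0.3, 1.5]},
]

if __name__ == "__main__":
    best = coarse_to_fine_retrieve([1.0, 0.0, 0.0], [0.45, 0.45, 0.5], ASSETS, top_k=2)
    print(best["name"])
```

In this toy setup the coarse stage alone ranks `office_chair` first, while the fine stage prefers `stool` because its proportions are closer to the observed object. This mirrors the motivation for refining a semantic shortlist with geometric cues, under the simplifying assumptions stated above.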

1. Simulated Scene for Articulated Interaction

2. Simulated Scene for Embodied Navigation

3. POSITION integrates with HunYuan3D